Offered By: IBMSkillsNetwork

RAG with Granite 3: Build a retrieval agent using LlamaIndex

Create a retrieval-augmented generation (RAG) application by using LlamaIndex and large language models (LLMs). By combining data retrieval with content generation powered by a Granite LLM, you'll enable natural-language querying over diverse document sources such as PDF, HTML, and TXT files. This approach simplifies working with complex documents, making it easier to build context-aware applications that return accurate and relevant information.

Guided Project

Artificial Intelligence

498 Enrolled

At a Glance

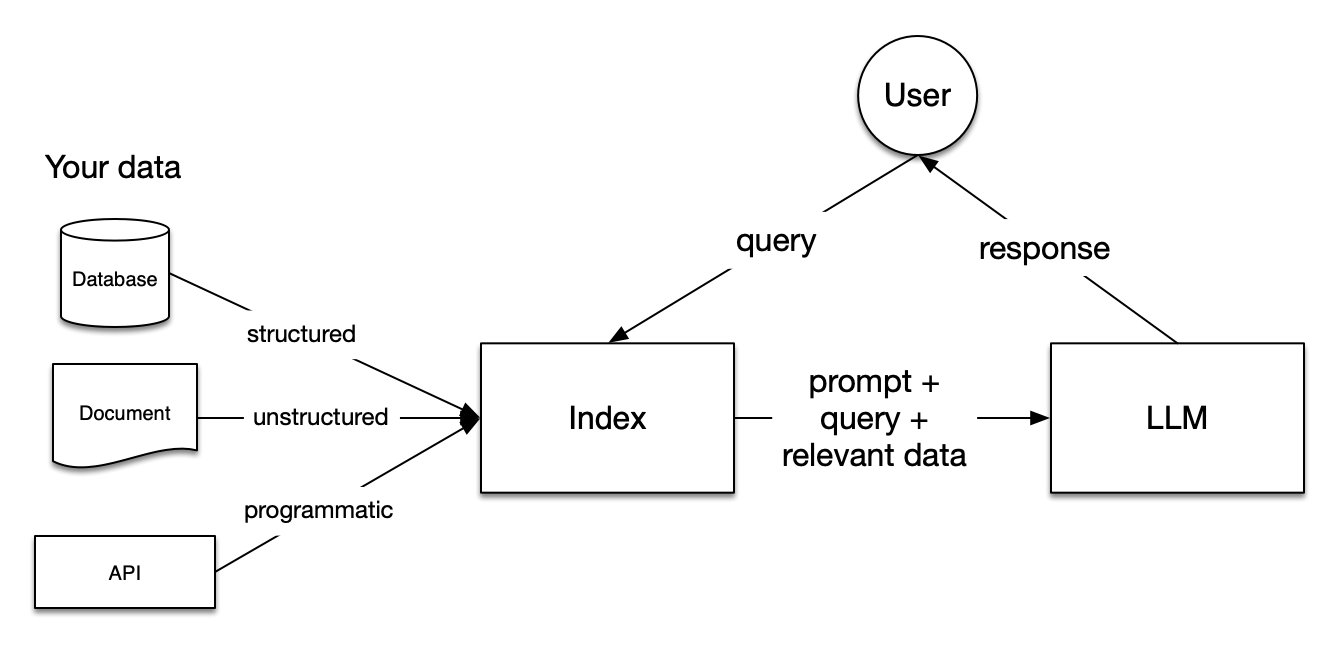

RAG framework (source: LlamaIndex)

A look at the project ahead

- Construct a RAG application: Use LlamaIndex to build a RAG application that efficiently retrieves information from various document sources.

- Load, index, and retrieve data: Master the techniques of loading, indexing, and retrieving data to ensure that your application accesses the most relevant information.

- Enhance querying techniques: Integrate LlamaIndex into your applications to improve querying techniques, ensuring that the responses are precise, contextually aware, and aligned with the most current data available.
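The load, index, and retrieve stages listed above can be sketched in plain Python. This is a minimal illustration only: it does not use LlamaIndex or a Granite model, it swaps in simple word-count cosine similarity where a real RAG application would use embeddings and a vector store, and every name in it (`load_documents`, `build_index`, `retrieve`, `build_prompt`, `SAMPLE_DOCS`) is hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def load_documents(raw_docs):
    """Load step: turn a {name: text} mapping into (name, text) records."""
    return [(name, text) for name, text in raw_docs.items()]


def build_index(documents):
    """Index step: store a word-count vector alongside each document."""
    return [(name, text, Counter(tokenize(text))) for name, text in documents]


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(index, query, top_k=1):
    """Retrieve step: return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(index, key=lambda entry: cosine(query_vec, entry[2]), reverse=True)
    return [(name, text) for name, text, _ in ranked[:top_k]]


def build_prompt(query, retrieved):
    """Augmentation step: place the retrieved text ahead of the question.

    In a full RAG application this prompt would be sent to an LLM.
    """
    context = "\n".join(text for _, text in retrieved)
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical sample data standing in for PDF, HTML, or TXT sources.
SAMPLE_DOCS = {
    "notes.txt": "LlamaIndex helps load and index documents for retrieval.",
    "menu.txt": "The cafe serves coffee, tea, and fresh pastries every morning.",
}

index = build_index(load_documents(SAMPLE_DOCS))
question = "Which drinks does the cafe serve?"
top = retrieve(index, question, top_k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The sketch stops at building the prompt, because the generation step depends on which LLM and client library the application uses.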

What you'll need

Estimated Effort: 30 Minutes

Level: Beginner

Skills You Will Learn: AI Agent, Generative AI, LlamaIndex, LLM, RAG, Vector Database

Language: English

Course Code: GPXX0TQPEN